Pink Floyd, Computers, and Dissecting Music: Breakthroughs in Science

- Dhruti Pattabhi

- Dec 30, 2023

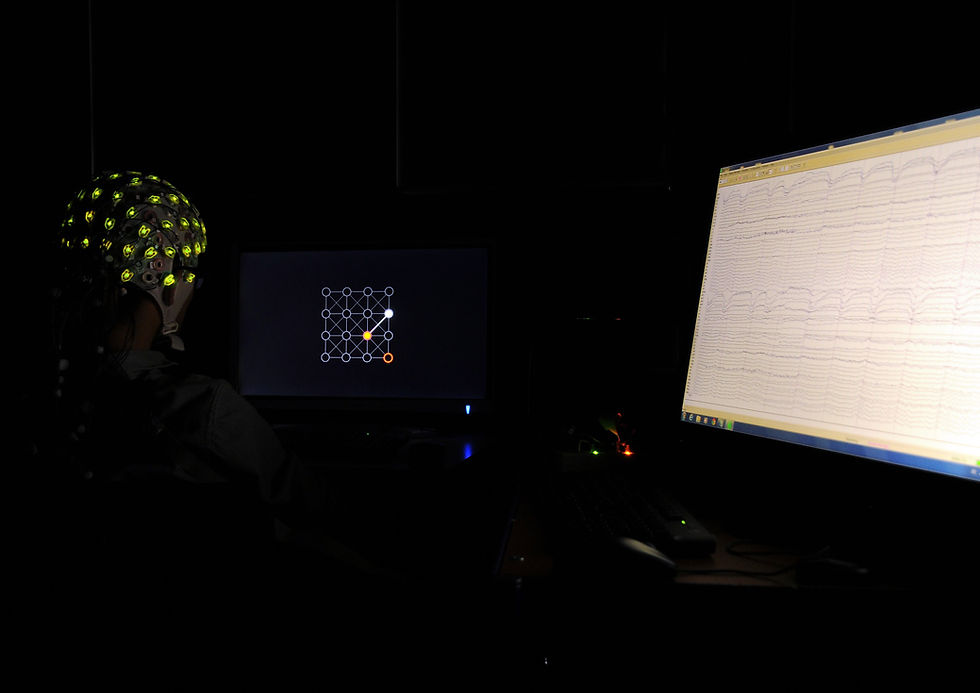

Brain-computer interfacing (BCI) is a new method scientists use to map brain signals in real time (Laurens R. Krol/Wikimedia Commons)

One of the oldest art forms, music remains an integral part of daily life and of the human experience. Extensive research demonstrates the importance of music to health and overall well-being. In particular, recent decades have seen remarkable progress in understanding how music affects us at the neural level. However, the exact neurodynamics (communication within the nervous system) of music perception remain unknown. That’s where Pink Floyd comes in.

Setting the Stage

Earlier this year, researchers were able to decode individual words and thoughts from brain activity. Now, neuroscientists at the Albany Medical College and the University of California, Berkeley, have managed to reconstruct a Pink Floyd song from the brain activity of neurosurgical patients.

In what seemed like a scene from a sci-fi movie, 29 participants with epilepsy passively listened to Pink Floyd’s “Another Brick in the Wall” through headphones while electrodes recorded their brain activity. They were told to simply listen to the music without paying attention to any particular details. The electrodes, which pick up the brain’s electrical signals, allowed the researchers to track how each patient’s brain responded to the song.

The Science Behind the Song Choice

Of course, the question remains: why Pink Floyd? “Another Brick in the Wall” is rich in tones, acoustics, and pitches, which makes it a complex auditory stimulus. The researchers expected such a song to elicit a diverse neural response spanning the brain regions that handle higher-order music perception.

After the patients had listened to the 1979 rock song, the raw recorded signals were inspected visually. The scientists then removed any electrode signals exhibiting noisy or epileptic activity. Lastly, they used regression-based decoding models, a type of computer model, to reconstruct the song’s auditory spectrogram (a map of how its sound frequencies change over time) from the recorded brain activity.
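To give a feel for what regression-based decoding means, the idea can be pictured as fitting a linear map from recorded brain activity to the song’s spectrogram, then using that map to predict the spectrogram for a stretch of the song the model has not seen. The snippet below is a minimal sketch under loud assumptions: it is not the researchers’ actual pipeline, the data are synthetic random numbers, and every name in it (`fit_ridge_decoder`, `make_synthetic_data`, the `alpha` penalty, the array sizes) is hypothetical and chosen only for illustration.

```python
import numpy as np


def fit_ridge_decoder(neural, spectrogram, alpha=1.0):
    """Fit weights W so that neural @ W approximates the spectrogram.

    neural:      (n_timepoints, n_electrodes) brain-activity features
    spectrogram: (n_timepoints, n_freq_bins) sound energy per frequency bin
    alpha:       ridge penalty (illustrative value, not taken from the study)
    """
    n_electrodes = neural.shape[1]
    # Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y
    gram = neural.T @ neural + alpha * np.eye(n_electrodes)
    return np.linalg.solve(gram, neural.T @ spectrogram)


def reconstruct(neural, weights):
    """Predict a spectrogram from brain activity using fitted weights."""
    return neural @ weights


def make_synthetic_data(n_time=400, n_electrodes=20, n_bins=8, seed=0):
    """Create fake 'recordings' where the spectrogram is a noisy linear
    function of the neural signal. Purely synthetic, for demonstration."""
    rng = np.random.default_rng(seed)
    neural = rng.standard_normal((n_time, n_electrodes))
    true_weights = rng.standard_normal((n_electrodes, n_bins))
    noise = 0.1 * rng.standard_normal((n_time, n_bins))
    return neural, neural @ true_weights + noise


neural, spectrogram = make_synthetic_data()

# Fit on the first 300 time points, then reconstruct the held-out last 100.
weights = fit_ridge_decoder(neural[:300], spectrogram[:300])
predicted = reconstruct(neural[300:], weights)

# Score the reconstruction: correlation between predicted and actual
# values, computed separately for each frequency bin.
correlations = [
    np.corrcoef(predicted[:, k], spectrogram[300:, k])[0, 1]
    for k in range(spectrogram.shape[1])
]
print(f"mean correlation on held-out data: {np.mean(correlations):.2f}")
```

Holding out part of the data matters here: a decoder is only convincing if it can reconstruct sound it was not fitted on. Real recordings are far noisier than this toy example, so a real decoder would not be expected to score as well.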

This study aimed to identify the brain regions involved in perceiving different acoustic elements. The neuroscientists ultimately observed right-hemisphere involvement in music perception and a possible new subregion of the superior temporal gyrus tuned to musical rhythm. They suggest the results could be used to incorporate musical elements into brain-computer interfaces (BCIs), systems that translate brain signals into commands.

Future Applications and the International Platform

BCIs allow individuals to control and operate machines with their thoughts. Expanding BCIs to incorporate a wider range of sounds and musical tones could make communication easier for people with brain lesions, paralysis, and other neurological conditions and disabilities. With the rapid integration of novel technologies, the BCI market has an anticipated growth rate of 16.7 percent between 2022 and 2030.

Piece by piece, sound by sound, and vibration by vibration, these researchers have paved the way for incorporating music into a system that may bring benefits for years to come. By laying the foundations for future research in auditory neuroscience, their discoveries are more than just “another brick in the wall.”

Sources & Further Reading

https://www.sciencenews.org/article/brain-implant-reads-peoples-thoughts

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3497935/

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10427021/

https://www.precedenceresearch.com/brain-computer-interface-market

https://www.nytimes.com/2023/08/15/science/music-brain-pink-floyd.html
